Neural networks (NNs) are systems loosely modeled on the way neurons in our brains process information. A neural network is trained on labeled information, which allows it to discover patterns in the data; it can then take in data it has never seen before and categorize it. For neural networks, you only give the network data and categories, and it discovers the patterns on its own and uses them to predict the most likely category. Neural networks have many possible applications, such as image recognition (which can be applied to medical diagnosis and reading handwriting), data mining, game playing, and machine translation.

For this project, I decided to attempt a neural network that could classify images of food into 11 unique food groups: Bread, Dairy Products, Dessert, Egg, Fried Food, Meat, Noodles/Pasta, Rice, Seafood, Soup, and Vegetable/Fruit. These groups came as part of the dataset, which I discuss in more depth below. My original end goal was to create a basic iOS app that could take a photo and then return the group the food belonged to. However, I decided to make it a web application instead, so you can upload your own image and test it out if you want!

This project used many libraries and packages, such as TensorFlow, Flask, Keras, and many more. Relying on libraries like these is typical in machine learning, and increasing my exposure to machine learning was one of the main reasons I wanted to complete this project. This was definitely the hardest project I have tackled so far, but I am proud of completing it. I hope that you enjoy reading this post!

Some big questions that I'm hoping to answer in this project are:

Before starting the project, I decided to learn TensorFlow, along with some basic techniques for fixing problems with a model. Through my AP Computer Science class and some research of my own, I had basic knowledge of neural networks and how they work at a conceptual level, but entering this project, my practical knowledge of the subject was lacking. While looking through Kaggle notebooks that created neural networks, I came across a TensorFlow walkthrough in which you code up a very basic neural network for classifying images of dogs and cats. Through this walkthrough, I built a very basic machine learning model and learned different ways of preventing overfitting, such as data augmentation and dropout. I used these techniques to create my model, and I also searched Stack Overflow and Kaggle for more effective ways to improve it.
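To make the two overfitting remedies concrete, here is a framework-free NumPy sketch of what each one does. It is not the walkthrough's code: `random_horizontal_flip` and `inverted_dropout` are hypothetical helper names, and in Keras you would normally use the built-in `tf.keras.layers.RandomFlip("horizontal")` and `tf.keras.layers.Dropout(rate)` layers instead.

```python
import numpy as np


def random_horizontal_flip(image, rng):
    """Data augmentation: mirror an (H, W, C) image left-to-right with probability 0.5."""
    if rng.random() < 0.5:
        return image[:, ::-1, :]
    return image


def inverted_dropout(activations, rate, rng, training=True):
    """Dropout: while training, zero each unit with probability `rate` and scale
    the survivors by 1 / (1 - rate); at inference, return the input unchanged."""
    if not training or rate == 0.0:
        return activations
    keep_mask = rng.random(activations.shape) >= rate
    return activations * keep_mask / (1.0 - rate)


rng = np.random.default_rng(seed=0)
image = np.arange(6, dtype=float).reshape(2, 3, 1)  # tiny 2x3 single-channel "image"
augmented = random_horizontal_flip(image, rng)
hidden = np.ones((4, 8))  # stand-in for a layer's activations
dropped = inverted_dropout(hidden, rate=0.5, rng=rng)
```

Because augmentation is re-sampled every time an image is seen, the model rarely sees the exact same input twice. The `1 / (1 - rate)` scaling keeps the expected activation equal between training and inference, which is why dropout can simply be switched off at prediction time.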

Going into this project, I knew that I wanted to do food classification. Initially, I chose to work with this dataset, also known as Food-101, which is named for its photos of 101 different types of food. However, after various tests with the dataset, I couldn't get the accuracy above 10%. That is relatively good given that there were 101 possibilities (random guessing would be right only about 1% of the time), but it wasn't close to the accuracy I was hoping for out of this project. In addition, the dataset wasn't split into train and test sets, which made it extremely difficult to train the model and check its accuracy. As a result, I pivoted to a simpler classification task that would give me higher accuracy, using this dataset instead. This dataset included unnecessary categories in its test and evaluation sets, so I made sure not to use them. This second dataset was the final dataset that I used.
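When a dataset arrives without a train/test split, one option is to carve one out yourself. The sketch below assumes the images are grouped into one list of file paths per category (the `paths_by_class` layout, the `stratified_split` name, and the example file names are all hypothetical) and holds out the same fraction of every category, so each food type appears in both sets. Keras also offers a built-in route through `tf.keras.utils.image_dataset_from_directory` with its `validation_split`, `subset`, and `seed` arguments.

```python
import random


def stratified_split(paths_by_class, test_fraction=0.2, seed=42):
    """Split {label: [paths]} into (train, test) lists of (path, label) pairs,
    holding out roughly `test_fraction` of each class. A fixed seed makes it reproducible."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(paths_by_class):
        paths = sorted(paths_by_class[label])  # sort first so the shuffle is deterministic
        rng.shuffle(paths)
        n_test = max(1, round(len(paths) * test_fraction))
        test += [(path, label) for path in paths[:n_test]]
        train += [(path, label) for path in paths[n_test:]]
    return train, test


# Hypothetical example: two categories with ten images each.
example = {
    "apple_pie": [f"apple_pie/{i}.jpg" for i in range(10)],
    "steak": [f"steak/{i}.jpg" for i in range(10)],
}
train, test = stratified_split(example, test_fraction=0.2)
```

Splitting per category rather than over the whole pile guards against a rare category ending up entirely in one set.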

This data required no parsing (yay!), so I could immediately jump into creating the model. After importing the necessary libraries (tensorflow, numpy, matplotlib.pyplot, etc.) along with the specific components needed to build the model (Sequential, Dense, Flatten, etc.), I started to create the model. I created training and validation generators, and built a dictionary mapping each numerical label to the category it belongs to, so that I could print the category name instead of just the number. Finally, I displayed 5 images with their respective categories to check how the images would appear.
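Keras's `flow_from_directory` exposes the label mapping it used as the generator's `class_indices` attribute (category name to integer), numbering the class folders in alphanumeric order. The plain-Python sketch below reproduces that ordering and inverts it into the number-to-category dictionary described above. The folder names are assumptions based on the 11 groups listed earlier (a slash cannot appear in a folder name, so `Noodles-Pasta` and `Vegetable-Fruit` are guesses), and `labels_for_batch` is a hypothetical helper for captioning a batch of one-hot labels like those the generator yields with its default `class_mode="categorical"`.

```python
def class_indices_from_folders(folder_names):
    """Assign 0..n-1 to class folders in sorted (alphanumeric) order, like flow_from_directory."""
    return {name: index for index, name in enumerate(sorted(folder_names))}


def invert_mapping(class_indices):
    """Turn {category: index} into {index: category} for printing labels."""
    return {index: name for name, index in class_indices.items()}


def labels_for_batch(one_hot_batch, index_to_label):
    """Map each one-hot (or probability) row to the category with the largest value."""
    return [index_to_label[row.index(max(row))] for row in one_hot_batch]


# Hypothetical folder names, deliberately out of order: sorting decides the indices.
folders = ["Soup", "Bread", "Meat", "Egg", "Rice", "Dessert", "Seafood",
           "Fried Food", "Dairy Products", "Noodles-Pasta", "Vegetable-Fruit"]
class_indices = class_indices_from_folders(folders)
index_to_label = invert_mapping(class_indices)

batch = [[0.0] * 11 for _ in range(2)]
batch[0][5] = 1.0  # one-hot label for index 5
batch[1][0] = 1.0  # one-hot label for index 0
print(labels_for_batch(batch, index_to_label))  # ['Meat', 'Bread']
```

With a real generator, the same inversion is just `{v: k for k, v in train_generator.class_indices.items()}`, which avoids hard-coding the order at all.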

Initially, I decided to create a model from scratch, with a mix of Conv2D (2D convolution), Dense, and Flatten layers. However, I then tested several existing image classification models, such as VGG-16, ResNet50, and InceptionV3, and compared the accuracy of each. Based on my tests, I decided to go with VGG-16. Afterwards, I adjusted the number of epochs (full passes through the training data), the steps per epoch, and other settings, eventually reaching an accuracy of 63%. There is definitely potential that tuning these settings further, or using one of the other models with specific values, could increase the accuracy considerably, but for now, I chose to publish the model at this accuracy. I plan to eventually go back and adjust these variables to improve the accuracy, but for now, I am happy with my progress.
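For readers who want to try the same approach, here is a minimal Keras transfer-learning sketch that puts a new 11-way classifier head on top of a frozen VGG-16 base. The head (a 256-unit Dense layer plus 50% dropout), the 224 x 224 input size, and the Adam optimizer are assumptions, not the settings behind the 63% result, and `build_vgg16_classifier` is a hypothetical name.

```python
import tensorflow as tf

NUM_CLASSES = 11              # the 11 food groups
INPUT_SHAPE = (224, 224, 3)   # VGG-16's default input size (assumed here)


def build_vgg16_classifier(num_classes=NUM_CLASSES, weights="imagenet"):
    """Frozen VGG-16 convolutional base plus a small trainable classification head.

    Inputs are expected to be preprocessed with
    tf.keras.applications.vgg16.preprocess_input, for example by passing it as
    ImageDataGenerator(preprocessing_function=...).
    """
    base = tf.keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=INPUT_SHAPE
    )
    base.trainable = False  # keep the pretrained feature extractor fixed

    inputs = tf.keras.Input(shape=INPUT_SHAPE)
    x = base(inputs, training=False)
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    x = tf.keras.layers.Dropout(0.5)(x)  # regularize the new head against overfitting
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",  # matches one-hot labels (class_mode="categorical")
        metrics=["accuracy"],
    )
    return model
```

Training would then look roughly like `model.fit(train_generator, validation_data=validation_generator, epochs=..., steps_per_epoch=...)`. `steps_per_epoch` is commonly set to the number of training images divided by the batch size, rounded up, so that one epoch covers the training set once. Freezing the base means only the head's weights are learned at first; unfreezing the top VGG-16 blocks later (fine-tuning) is a common next step when accuracy plateaus.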

At this point, the model only worked inside my Kaggle notebook. To make it "callable" from the outside world, I had to figure out how to expose the model. This meant learning about TensorFlow (TF) Serving, a system for exposing TF models as APIs (Application Programming Interfaces). TensorFlow Serving can be run as a Docker container, so I had to learn the basics of Docker and how to deploy containers. I learned how to use it from this TensorFlow article.
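TensorFlow Serving's REST API accepts a POST to `/v1/models/<model_name>:predict` with a JSON body of the form `{"instances": [...]}` and replies with `{"predictions": [...]}`. The standard-library sketch below talks to that endpoint. It assumes the model was exported as a SavedModel into a numbered version folder (for example with `tf.saved_model.save(model, "food_classifier/1")`) and that the container was started along the lines of the official example, `docker run -p 8501:8501 --mount type=bind,source=/absolute/path/to/food_classifier,target=/models/food_classifier -e MODEL_NAME=food_classifier -t tensorflow/serving`. The model name `food_classifier` and the localhost URL are hypothetical.

```python
import json
import urllib.request

# Hypothetical endpoint: 8501 is TF Serving's default REST port, and
# "food_classifier" must match the MODEL_NAME the container was started with.
SERVING_URL = "http://localhost:8501/v1/models/food_classifier:predict"


def build_predict_request(images):
    """Encode a batch of images (nested lists shaped [N, H, W, 3]) in TF Serving's row format."""
    return json.dumps({"instances": images}).encode("utf-8")


def parse_predict_response(body):
    """Return the per-image model outputs (for this model, 11 class probabilities each)."""
    return json.loads(body)["predictions"]


def predict(images, url=SERVING_URL, timeout=30):
    """Send one predict request to TF Serving and return the parsed predictions."""
    req = urllib.request.Request(
        url,
        data=build_predict_request(images),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as response:
        return parse_predict_response(response.read())
```

The images must already be resized and preprocessed exactly as they were during training, since TF Serving only runs the exported graph.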

I downloaded the model from Kaggle, along with Docker, an application that runs containers, such as local web applications, on different ports of your computer. In addition, I installed TensorFlow, Keras, and Flask on my local machine, which was necessary to run the model locally. Downloading took a long time because of various unexplained errors that Kaggle gave me, but eventually I managed to get everything onto my computer and successfully got it working locally. The next step was to put it on the internet so that anyone, regardless of location, could use it.

I set up a Linode server, on which I again installed TensorFlow, Keras, and Flask. I also installed Docker on the server and set it up the same way as in the previous step, so that my model would be accessible through a public IP address instead of only on my localhost.
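The Flask side of a setup like this can be quite small. The sketch below is an assumed structure, not the code behind the live site: `create_app` takes a `classify` function (hypothetical; in a deployment it would preprocess the image and query TensorFlow Serving) that returns a `(label, confidence)` pair, and the upload page uses a file input with `accept="image/*"`, which lets mobile browsers offer the photo library. Passing the classifier in also makes the app testable without a model.

```python
from flask import Flask, jsonify, request

# Minimal upload page; accept="image/*" restricts the picker to images.
UPLOAD_FORM = """<!doctype html>
<form action="/predict" method="post" enctype="multipart/form-data">
  <input type="file" name="image" accept="image/*">
  <button type="submit">Classify</button>
</form>"""


def create_app(classify):
    """Build the web app; `classify(image_bytes)` must return (label, confidence)."""
    app = Flask(__name__)

    @app.route("/", methods=["GET"])
    def index():
        return UPLOAD_FORM

    @app.route("/predict", methods=["POST"])
    def predict():
        upload = request.files.get("image")
        if upload is None or upload.filename == "":
            return jsonify(error="no image uploaded"), 400
        label, confidence = classify(upload.read())
        return jsonify(label=label, confidence=round(float(confidence), 4))

    return app
```

On the server, something like `create_app(my_classify).run(host="0.0.0.0", port=5000)` (with `my_classify` being your own function) makes the app listen on all network interfaces, which is what lets it answer on the public IP rather than only on localhost. Flask's built-in server is intended for development, so a WSGI server such as Gunicorn is the more usual choice for a public site.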

If you want to try out my model, please click here to open its web interface. I have also attached some screenshots of the interface with an example below.

This is the first screen you see when you follow the link to the model. You can upload a photo from your computer or, if you are on mobile, choose one from your photo library.

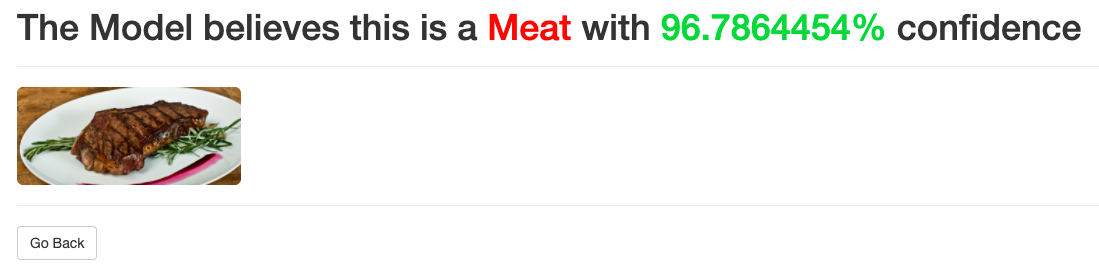

This is a successful classification by the model. In this case, the model is classifying a photo of steak, which it correctly placed in the "Meat" category with over 96% confidence! :D
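The confidence figure is the probability the network assigns to its top category. With a softmax output layer those probabilities come straight out of the model; the plain-Python sketch below shows how raw scores (logits) become probabilities and how the top label and its confidence can be read off. The label order assumes the alphabetical order of the 11 groups listed earlier, and the scores in the example are made up for illustration.

```python
import math

# Assumed label order (alphabetical, as Keras numbers class folders by sorted name).
FOOD_GROUPS = ["Bread", "Dairy Products", "Dessert", "Egg", "Fried Food", "Meat",
               "Noodles/Pasta", "Rice", "Seafood", "Soup", "Vegetable/Fruit"]


def softmax(logits):
    """Turn raw scores into probabilities summing to 1 (max subtracted for numerical stability)."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_prediction(probabilities, labels=FOOD_GROUPS):
    """Return (label, confidence) for the most probable class."""
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best], probabilities[best]


# Made-up scores where "Meat" (index 5) clearly wins.
scores = [0.0] * 11
scores[5] = 5.0
label, confidence = top_prediction(softmax(scores))
print(f"{label}: {confidence:.1%}")  # Meat: 93.7%
```

A high softmax probability means the model strongly prefers one category over the others; it is not a guarantee that the prediction is correct.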

This project pushed me to my limits, as I had to learn a lot and create everything from scratch. However, I am very happy with the final product, and I hope that you enjoy it as well! Below are some takeaways I had after coding up my first NN.

Main Takeaways

All in all, this project was a great introduction to NNs, and I am looking forward to making more comprehensive projects with them. Thank you so much for reading this post. I hope that you enjoyed it!